Translation Quality Assurance Side-by-Side Expert Review

Translation Quality Assurance: Compare Original and Translated Text Side-by-Side


- Side-by-side bilingual comparison is the most reliable way to validate accuracy, consistency, and cultural fit.

- Combine automation and human review: tools catch mechanical errors quickly; humans catch nuance and context.

- Use structured frameworks (MQM, SAE J2450, LISA, DQF) to classify and score errors consistently.

- Error annotation combined with weighted scoring helps prioritize fixes and measure improvement over time.

- Integrate QA across the workflow—pre-translation, during translation, and post-translation checks prevent costly rework.

Why Side-by-Side Comparison Forms the Foundation of Translation QA

I've worked in localization for over a decade, and when it comes to quality, nothing matches placing source and target text next to each other. Bilingual comparison gives reviewers a direct line of sight between intent and output so they can answer the question: does this segment truly reflect what the source communicates?

This method reveals omissions, additions, and subtle meaning shifts that automated checks often miss. I once reviewed a technical manual where safety warnings had been paraphrased into vague suggestions — a critical failure only detectable via bilingual comparison.

Trade-offs: it's resource-intensive and requires skilled linguists who combine fluency in both languages with subject-matter knowledge and cultural competence. For high-stakes content, however, there's no real shortcut to the assurance this approach provides.

Understanding Quality Frameworks That Structure Your QA Process

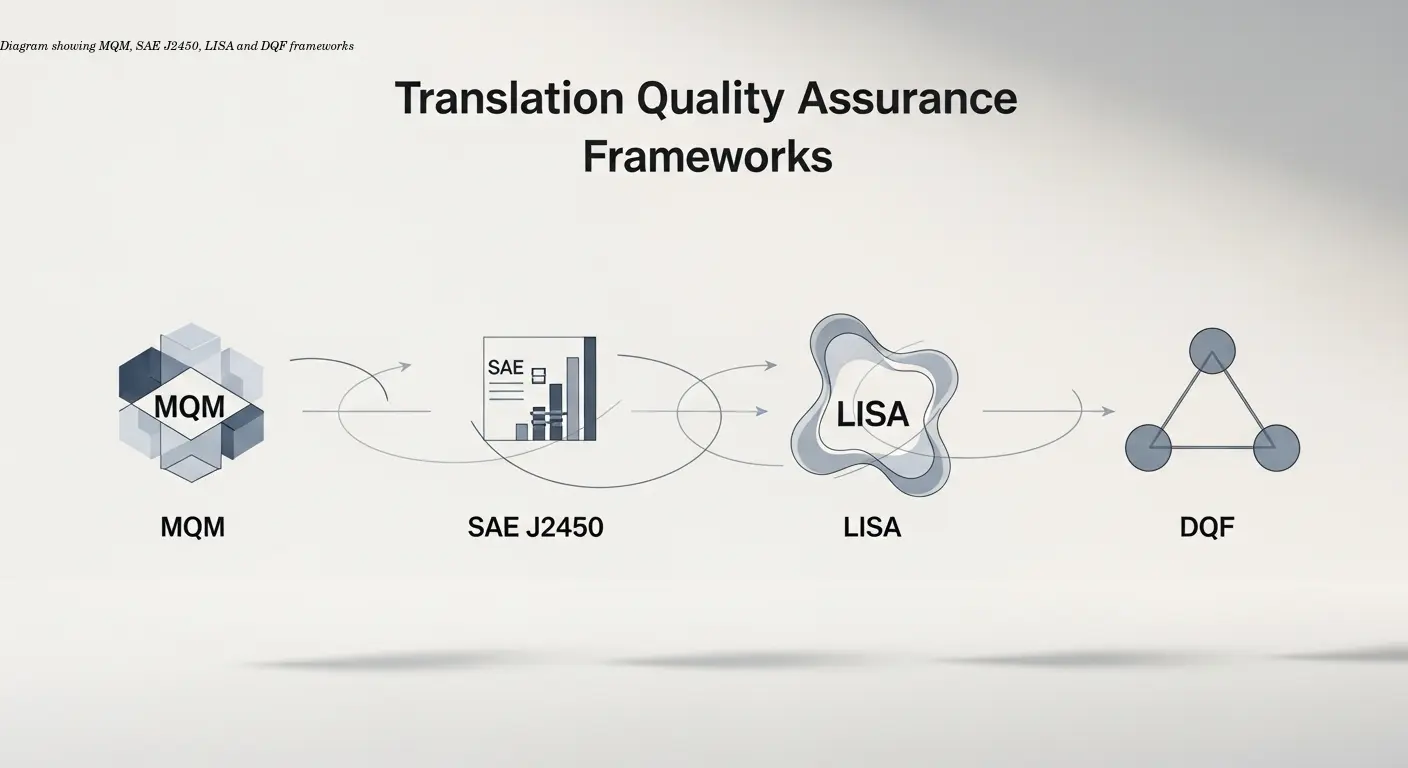

QA is more than typo-hunting; it needs reproducible frameworks so teams can categorize and prioritize errors consistently. Below are frameworks I use and recommend depending on content type.

Multidimensional Quality Metrics (MQM)

MQM breaks quality into dimensions like accuracy, fluency, terminology, style, and cultural appropriateness. It's especially useful for training reviewers because it provides concrete categories rather than subjective impressions.

SAE J2450

Originating in automotive localization, SAE J2450 focuses on specific error types—incorrect terminology, grammatical errors, omissions, agreement issues, typos, and punctuation. Its weighted scoring helps distinguish minor vs. critical problems, which is essential for technical content where precision matters.

LISA QA Model

LISA targets software localization and organizes errors into language, format, and function. It recognizes that a translation must work in the interface as well as read naturally—a perfectly translated label that breaks the UI is still a failure.

Dynamic Quality Framework (DQF)

DQF is flexible and customizable, making it suitable for large-scale, multi-language projects. It integrates well with workflows and allows teams to adapt error categories and weighting to project needs.

These frameworks provide defensible quality measurements you can report to stakeholders and use to track improvement over time.

How Manual Evaluation Captures What Machines Miss

Skilled human reviewers detect contextual and cultural issues that machines still struggle with. A translation might be grammatically correct yet wrong in register or tone. Literal translations of cultural references may be technically accurate but meaningless or offensive in the target market.

Machines can score a translation highly while human evaluators spot that the tone is entirely misplaced for the audience.

Consistency in manual evaluation is a challenge—subjective criteria like style vary between reviewers. Combining human review with structured frameworks reduces variability while preserving human judgment.

Leveraging Automated QA Tools for Consistent Quality Checks

Automated QA integrated into CAT and TMS platforms dramatically speeds up detection of mechanical issues: inconsistent translations of the same term, missing tags, number mismatches, and formatting violations. Tools can scan thousands of segments in minutes and apply terminology rules against glossaries.
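
To make the mechanical checks above concrete, here is a minimal sketch of what such a checker does under the hood. It is not how any particular CAT or TMS tool implements QA; the function names, regular expressions, and glossary format are my own assumptions for illustration.

```python
import re
from collections import defaultdict

# Hypothetical patterns: digit groups with optional separators, and
# HTML-like tags or {0}-style placeholders.
NUMBER_RE = re.compile(r"\d+(?:[.,]\d+)*")
TAG_RE = re.compile(r"</?[A-Za-z][^>]*>|\{\d+\}")


def _numbers(text):
    # Remove tags first so the digit in a placeholder like {1} is not counted,
    # then drop separators so "1,000" and "1.000" compare equal.
    # Known blind spot: "1.5" and "15" also compare equal.
    stripped = TAG_RE.sub(" ", text)
    return sorted(re.sub(r"\D", "", n) for n in NUMBER_RE.findall(stripped))


def check_segment(source, target, glossary):
    """Return a list of mechanical issues for one source/target pair."""
    issues = []
    if _numbers(source) != _numbers(target):
        issues.append("number mismatch")
    if sorted(TAG_RE.findall(source)) != sorted(TAG_RE.findall(target)):
        issues.append("tag mismatch")
    # Naive case-insensitive substring match; real tools handle inflection.
    for src_term, tgt_term in glossary.items():
        if src_term.lower() in source.lower() and tgt_term.lower() not in target.lower():
            issues.append(f"glossary term not used: {src_term} -> {tgt_term}")
    return issues


def find_inconsistent(pairs):
    """Map each source segment that has more than one distinct translation."""
    seen = defaultdict(set)
    for source, target in pairs:
        seen[source].add(target)
    return {src: tgts for src, tgts in seen.items() if len(tgts) > 1}
```

Every check here is a pattern comparison, which is why it scales to thousands of segments and also why it cannot tell whether the target means the same thing as the source.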

Many CAT and TMS platforms run these checks in real time while translators work. Some advanced systems use AI to flag segments whose meaning may diverge from the source. But be aware: automation works on patterns and rules, not true understanding. It can approve translations that are semantically wrong and flag valid alternatives as errors.

Metric limitations: BLEU, METEOR, and ROUGE measure how closely a translation overlaps with reference translations, not whether it is right for the context. Combine automated checks for speed with human review for semantic verification.
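
The limitation is easy to see with a toy overlap score. The sketch below computes clipped unigram precision, one building block of BLEU. It is not full BLEU (no higher-order n-grams, no brevity penalty), and the example sentences are invented for illustration.

```python
from collections import Counter


def ngram_precision(candidate, reference, n=1):
    """Clipped n-gram precision: share of candidate n-grams found in the reference."""
    def ngrams(text):
        tokens = text.lower().split()
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand, ref = ngrams(candidate), ngrams(reference)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    # Each candidate n-gram counts at most as often as it occurs in the reference.
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / total


reference = "do not open the valve while the pump is running"
wrong = "do open the valve while the pump is running"       # meaning reversed
paraphrase = "keep the valve closed when the pump is on"    # meaning preserved

print(ngram_precision(wrong, reference))       # 1.0
print(ngram_precision(paraphrase, reference))  # about 0.56
```

The candidate that drops "not" reverses the instruction yet scores perfectly on overlap, while the safe paraphrase scores far lower. That gap is what a bilingual human reviewer is there to catch.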

Building an Effective Translation QA Workflow

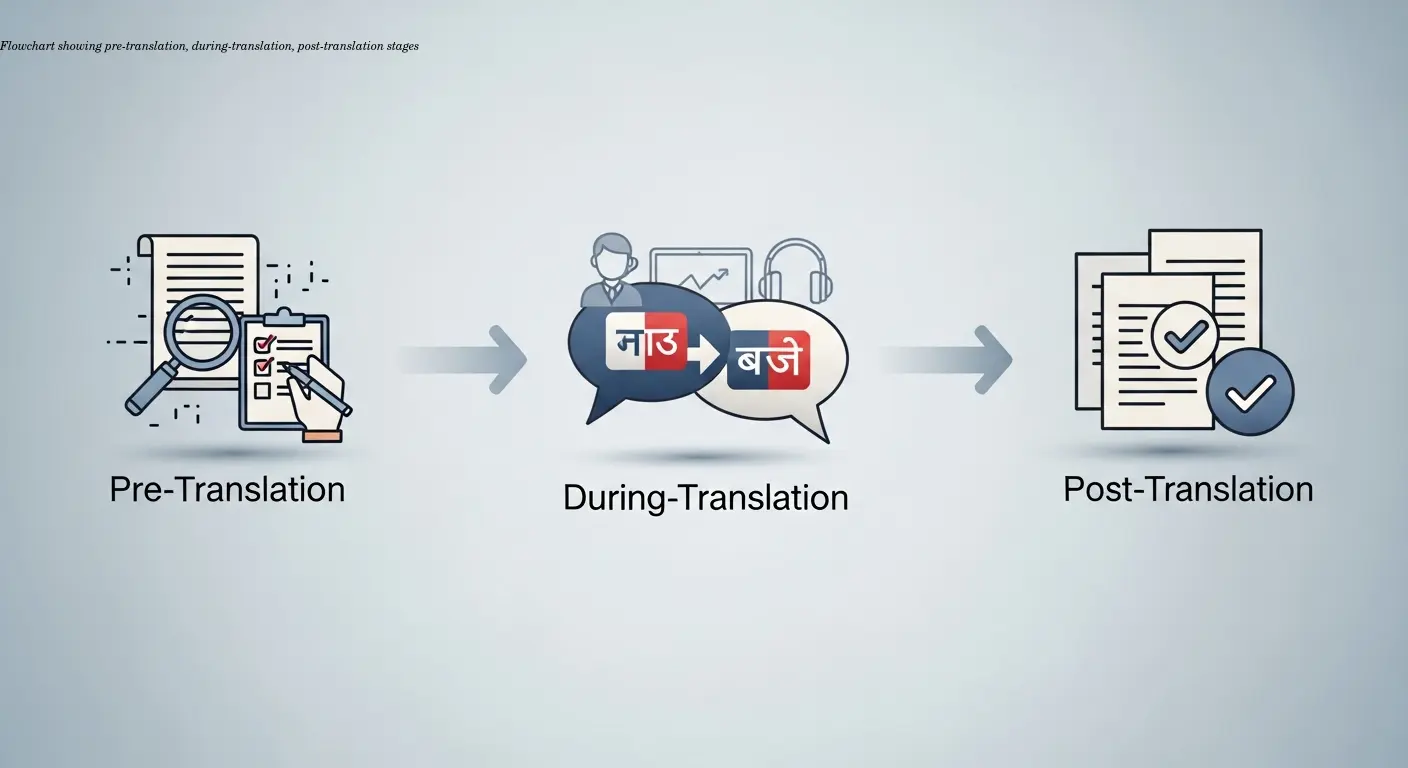

QA works best when integrated across three stages: pre-translation, during translation, and post-translation. Each stage catches different issues at the point where they're easiest to fix.

Pre-translation QA

Set projects up for success: validate source files, confirm reference materials, and ensure terminology databases are current. Skipping this step lets source-side problems propagate into expensive downstream errors.

During-translation QA

Modern CAT tools provide real-time warnings for terminology violations, TM inconsistencies, and formatting issues. Immediate feedback reduces rework by letting translators address problems instantly.

Post-translation QA

This is where comprehensive bilingual comparison happens: reviewers apply frameworks, automated checks run full suites, errors are tagged and scored, and quality reports are generated for stakeholders.

Treat QA as continuous rather than a final gate—prevention is faster and cheaper than correction.

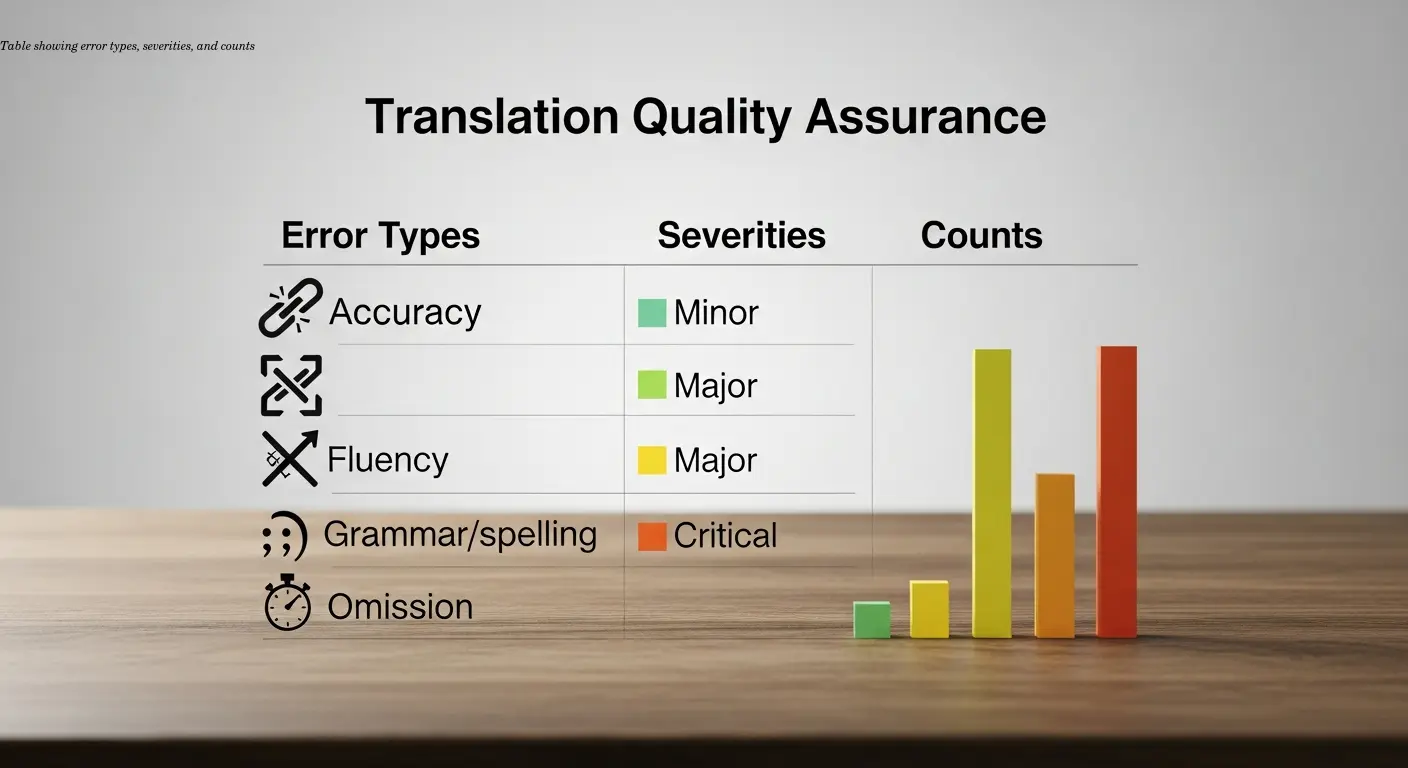

Practical Error Annotation and Quality Reporting

Error annotation turns qualitative problems into structured data. Reviewers categorize each issue, assign severity, and add context. This helps translators learn, supports targeted training, and produces metrics to track quality trends.

Severity systems typically include critical, major, minor, and preferential classifications. Use these to prioritize fixes—critical errors render content unusable or dangerous, while preferential issues are stylistic choices.
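
As a rough sketch of how annotations become numbers, the example below stores each finding as a record and computes a weighted penalty normalized per 1,000 words. The weights, field names, and category labels are illustrative assumptions, not values prescribed by any one framework; set them to match your own agreement with the client.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical severity weights; preferential issues carry no penalty.
SEVERITY_WEIGHTS = {"critical": 25, "major": 5, "minor": 1, "preferential": 0}


@dataclass
class Annotation:
    segment_id: int
    category: str       # e.g. "accuracy", "terminology", "fluency"
    severity: str       # must be a key in SEVERITY_WEIGHTS
    comment: str = ""   # context for the translator


def penalty_per_thousand(annotations, word_count):
    """Sum of severity weights, normalized to 1,000 words of reviewed text."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    total = sum(SEVERITY_WEIGHTS[a.severity] for a in annotations)
    return total * 1000 / word_count


def counts_by_category(annotations):
    """How many findings fall into each category, for trend reporting."""
    return Counter(a.category for a in annotations)


def fix_order(annotations):
    """Most severe findings first (stable sort keeps review order within a level)."""
    return sorted(annotations, key=lambda a: SEVERITY_WEIGHTS[a.severity], reverse=True)


annotations = [
    Annotation(3, "terminology", "major", "glossary term not used"),
    Annotation(12, "accuracy", "critical", "safety warning softened"),
    Annotation(40, "style", "preferential"),
    Annotation(7, "fluency", "minor"),
]
print(penalty_per_thousand(annotations, word_count=2000))  # 15.5
print(fix_order(annotations)[0].comment)                   # safety warning softened
```

Normalizing by word count is what makes scores comparable across jobs of different sizes, and the per-category counts show where targeted training would help most.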

Use metrics wisely: metrics should diagnose and guide improvements, not be gamed. Document decisions and avoid letting scores replace professional judgment.

Combining Human Expertise With Automation for Best Results

The best quality workflows combine both approaches: automation for scale and consistency, humans for nuance and judgment. For many projects this means running automated checks first, then focusing human reviewers on flagged or high-stakes segments.
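
One way to picture that routing is a simple triage step: anything an automated check flags, or anything tagged as high-stakes, goes to a full bilingual review queue, and the rest gets lighter spot checks. The segment fields, content-type labels, and the toy number check below are assumptions for illustration only.

```python
import re


def numbers_differ(source, target):
    """Toy automated check: flag segments whose digit sequences differ."""
    same = sorted(re.findall(r"\d+", source)) == sorted(re.findall(r"\d+", target))
    return [] if same else ["number mismatch"]


def route_segments(segments, high_stakes_types, check=numbers_differ):
    """Split segments into a full-review queue and a spot-check queue."""
    human_review, spot_check = [], []
    for seg in segments:
        issues = check(seg["source"], seg["target"])
        if issues or seg["content_type"] in high_stakes_types:
            # Keep the automated findings so the reviewer sees why it was routed.
            human_review.append({**seg, "issues": issues})
        else:
            spot_check.append(seg)
    return human_review, spot_check


segments = [
    {"id": 1, "content_type": "safety",
     "source": "Take 2 tablets daily.", "target": "Prenez 2 comprimés par jour."},
    {"id": 2, "content_type": "ui",
     "source": "Version 3 is available.", "target": "La version 4 est disponible."},
    {"id": 3, "content_type": "ui",
     "source": "Click Save.", "target": "Cliquez sur Enregistrer."},
]
review, spot = route_segments(segments, high_stakes_types={"safety"})
print([s["id"] for s in review])  # [1, 2]
print([s["id"] for s in spot])    # [3]
```

Segment 1 passes the automated check but still goes to a human because of its content type, which is the point: a clean automated result is not evidence that the meaning is right.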

I worked on a pharmaceutical localization where automation validated terminology and formatting across 50,000 segments, while humans focused on safety information and patient-facing text. The combined strategy kept quality high while controlling time and cost.

Match the method to the task: don't waste human time on what machines do well, and don't expect automation to catch meaning-level issues.

FAQ

What's the difference between translation QA and LQA?

Translation QA and LQA (Linguistic Quality Assurance) are essentially the same thing—LQA is just a more specific term emphasizing the linguistic aspects of quality checking. Both involve comparing source and target texts to verify accuracy, consistency, and appropriateness. Some organizations use LQA when they want to distinguish linguistic review from technical QA like file formatting or functionality testing.

How long should translation QA take?

It depends on content complexity, length, and required quality level. As a rough guideline, comprehensive manual QA typically takes about 20-30% of the original translation time. So if translation took 10 hours, expect 2-3 hours for thorough QA. Automated checks run much faster—often minutes—but you still need human review time for flagged issues. Critical content like legal or medical documents may require more extensive review.

Can I do translation QA if I only speak the target language?

Not really, no. Effective translation QA requires bilingual comparison—you need to verify that the target text accurately reflects the source text. Monolingual review (checking only the target language) can catch some errors like typos, grammar mistakes, or unnatural phrasing, but it will miss mistranslations, omissions, and additions. For quality assurance rather than just proofreading, bilingual competence is essential.

What QA tools integrate with Trados or memoQ?

Most major CAT platforms include built-in QA functionality and also support third-party QA tools. ApSIC Xbench is a popular standalone QA tool that works with files from Trados, memoQ, and other CAT platforms, and ErrorSpy is another standalone option. Many cloud-based TMS platforms, such as Phrase (formerly Memsource) and Smartcat, have integrated QA features that run automatically within the translation environment.

How do I choose which QA framework to use?

Match the framework to your content type and industry. MQM offers flexibility for most content types and is increasingly becoming an industry standard. SAE J2450 works well for automotive and technical content. LISA QA Model is designed for software localization. DQF provides customization for large, complex projects. Many organizations create their own frameworks based on these standards but adapted to their specific needs and priorities.

Should I do QA before or after editing?

Both, ideally. Light automated QA during translation catches obvious errors early. More comprehensive QA should happen after editing/review but before final delivery. Some workflows include a QA check between translation and editing to catch major issues before the editor starts work. The goal is catching and fixing errors at the stage where correction is easiest and least expensive.

What's an acceptable error rate in translation QA?

This varies enormously by content type and client requirements. For general content, you might target fewer than 1-2 errors per 1,000 words. For critical content like medical or legal translations, even that might be too high. Some enterprise clients specify exact error thresholds in their contracts (like 0.5 major errors per 1,000 words). Focus less on arbitrary numbers and more on whether the translation achieves its intended purpose for its audience.
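
If a contract does specify thresholds, checking a job against them is simple arithmetic. Here is a minimal sketch; the threshold values and dictionary layout are assumptions chosen to mirror the examples above.

```python
def errors_per_thousand(error_count, word_count):
    """Error rate normalized to 1,000 words."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    return error_count * 1000 / word_count


def meets_thresholds(error_counts, word_count, thresholds):
    """True if every severity's rate is at or below its per-1,000-word limit."""
    return all(
        errors_per_thousand(error_counts.get(severity, 0), word_count) <= limit
        for severity, limit in thresholds.items()
    )


# Hypothetical contract: at most 0.5 major and 2 minor errors per 1,000 words.
thresholds = {"major": 0.5, "minor": 2.0}
print(errors_per_thousand(3, 4000))                                  # 0.75
print(meets_thresholds({"major": 3, "minor": 5}, 4000, thresholds))  # False
print(meets_thresholds({"major": 2, "minor": 5}, 4000, thresholds))  # True
```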

How do I handle disagreements about QA findings?

This is where clear frameworks and severity definitions really help. When reviewers and translators disagree about whether something is an error, refer back to your established criteria and client specifications. Document the reasoning behind decisions. For subjective style issues, consider whether the change genuinely improves quality or is just personal preference. Many teams have a senior linguist or QA lead who makes final decisions on disputed issues. The goal is consistency, not perfection according to one person's taste.
