A/B Testing Content: How to Test Copy That Converts

Estimated reading time: 8 minutes

Key takeaways

- A/B testing content lets you compare copy variations scientifically by measuring which text performs better with your audience.

- Prioritize high-impact elements like headlines, CTAs, and subject lines; test one variable at a time to isolate effects.

- Statistical significance matters: run tests long enough to gather meaningful data and avoid premature decisions.

- Document hypotheses and results, and focus testing on your highest-volume communications to maximize ROI.

- Build a continuous testing culture — iterate, record learnings, and use winners as new controls.

Table of contents

- Understanding A/B Testing Content and Its Impact

- What Copy Elements Should You Test First

- Building Your A/B Testing Framework

- Running Tests That Actually Work

- Copy Variations That Drive Conversion Optimization

- Best Practices for Testing Marketing Copy

- Common Pitfalls and How to Avoid Them

- FAQ

Understanding A/B Testing Content and Its Impact

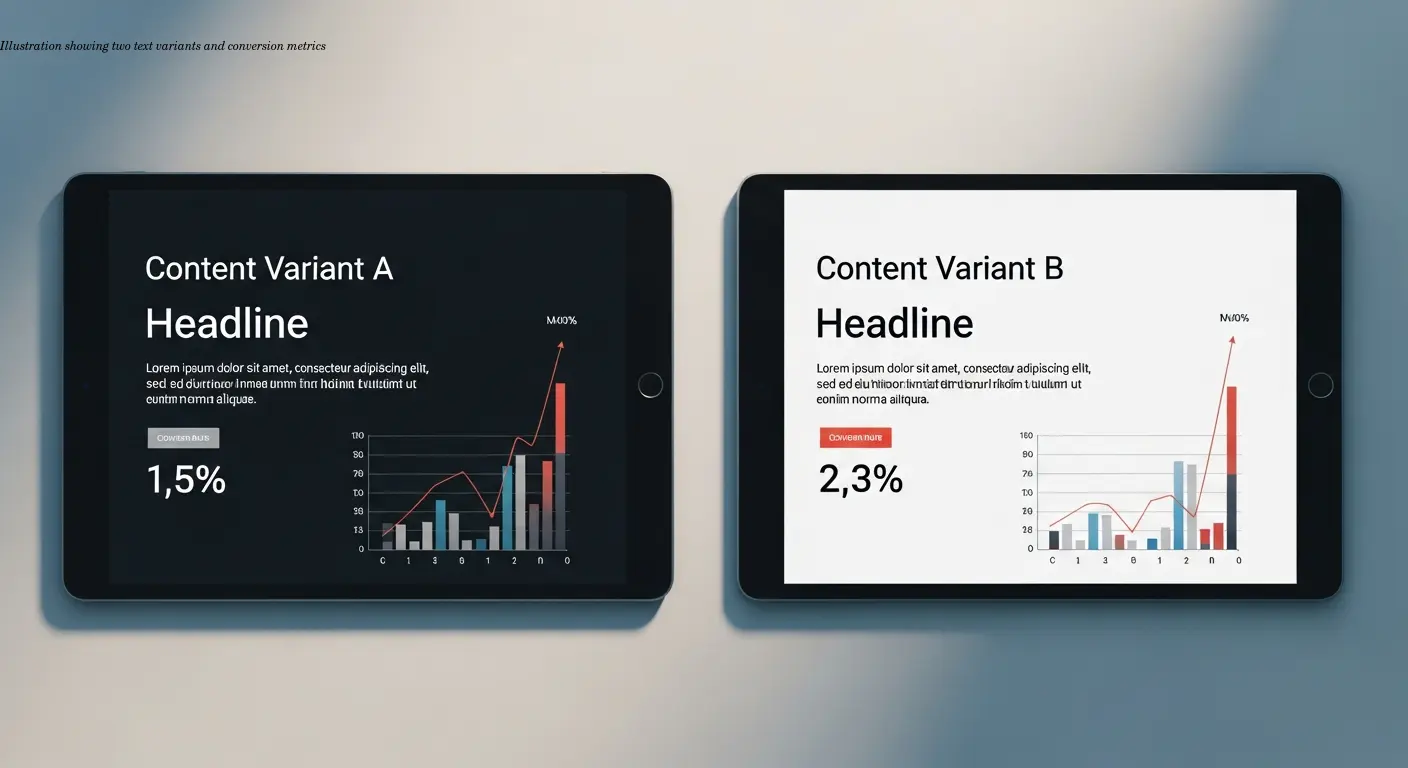

A/B testing content is a scientific method for determining which words resonate best with your audience. You create two versions of the same marketing copy, present them to similar groups of users, and measure which one produces better results. While the concept is simple, the impact on conversion optimization can be substantial.

The core purpose of text A/B testing is to remove guesswork. Instead of relying on gut instincts about whether a headline should read "Get Started Today" or "Start Your Free Trial," you test both and let real user behavior provide the answer. This data-driven approach enables growth marketers to make incremental improvements that compound over time.

The power of A/B testing comes from isolation: you test one specific piece of copy while keeping everything else constant. When performance differs, you can attribute the change to that one variation. For example, a well-executed headline test on a landing page might reveal that action-oriented language increases click rates by 15%—a concrete insight you can act on.

For growth teams, A/B testing content turns hope into knowledge. Each completed experiment builds a compounding knowledge base about what resonates with your unique audience, guiding future campaigns and messaging decisions.

What Copy Elements Should You Test First

Not all copy elements are equal. Some are quick wins with large impact; others require more effort for smaller gains. Prioritize elements that directly influence user decisions and appear on your highest-traffic pages or in your highest-volume sends.

Headlines and subject lines

These are often the first words users see and determine whether someone opens an email or continues reading a page. Test different lengths, tones, and value propositions—I've seen single-word changes in subject lines lift open rates by 20% or more.

CTA button copy

Your CTA is the moment of conversion. Small changes—"Submit" vs. "Get My Free Guide"—can produce large shifts in clicks. Experiment with urgency ("Buy Now" vs. "Shop Today"), value ("Start Free Trial" vs. "Try Free for 30 Days"), and action framing.

Body copy and product descriptions

These impact persuasion but are often subtler. Test long-form vs. short-form, bullet points vs. paragraphs, and benefit-focused vs. feature-focused language. These tests take longer to yield clear winners but are valuable for understanding information processing.

Tip: For email campaigns, start with the messages you send most frequently—weekly newsletters or abandoned cart reminders—because they reach more users and produce faster learnings than seasonal campaigns.

Building Your A/B Testing Framework

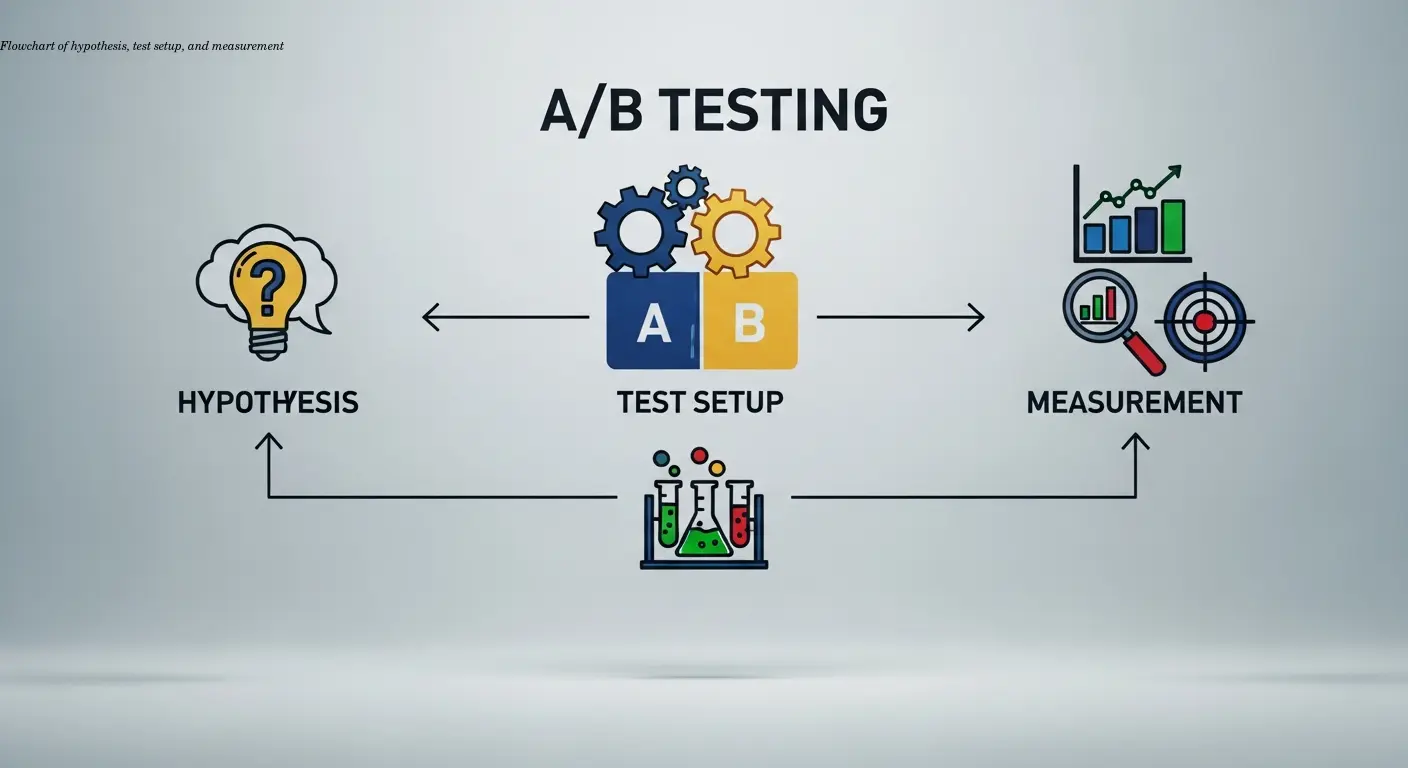

A structured framework keeps tests meaningful and ensures learnings accumulate. Without it, experiments become random and don't drive consistent improvement.

Set specific, measurable goals

Don't say "improve performance." Define the metric and target: increase email open rate by 10%, lift landing-page conversion from 3% to 4%, or raise CTA click-through by 15%. Your goal determines which metric you track.
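When you write the target down, it helps to be explicit about whether you mean an absolute or a relative change, because the two are easy to confuse. A minimal sketch of the arithmetic, using the 3% to 4% landing-page goal from above (the function names are illustrative, not from any testing tool):

```python
def absolute_lift(baseline: float, target: float) -> float:
    """Change in the rate itself, e.g. 0.03 -> 0.04 is one percentage point."""
    return target - baseline


def relative_lift(baseline: float, target: float) -> float:
    """Change expressed as a fraction of the baseline rate."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (target - baseline) / baseline


# Landing-page goal from the text: lift conversion from 3% to 4%.
print(f"absolute lift: {absolute_lift(0.03, 0.04):.3f}")  # 0.010
print(f"relative lift: {relative_lift(0.03, 0.04):.1%}")  # 33.3%
```

So "3% to 4%" is a one-point absolute change but roughly a one-third relative improvement, which is a much more ambitious goal than "increase by 10%".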

Develop a clear hypothesis

A hypothesis forces you to articulate why you expect a result. Example: "Using first-person language ('Start My Free Trial') will increase CTA clicks by 12% compared to second-person ('Start Your Free Trial') because it creates stronger ownership." Documenting your rationale helps you learn whether your assumptions hold.

Isolate one variable

If you change headline, body, and CTA at once, you won't know which change caused results. Keep everything identical except the tested element to obtain actionable insights.

Create meaningful variations

Avoid tiny tweaks that are unlikely to move metrics. "Get Started" vs. "Get Started Today" might be too subtle; contrast "Get Started Today" with "Claim Your Free 30-Day Trial" for strategic differences that can drive behavior.

Running Tests That Actually Work

Execution is where many tests fail. Proper setup and discipline determine whether your results are trustworthy and actionable.

Test both versions simultaneously

Run variations at the same time to avoid time-based bias. Most testing platforms randomize assignments so both versions experience identical external conditions.
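If you are curious what randomized assignment can look like under the hood, here is a minimal sketch of one common approach: hashing a user ID together with an experiment name so each user lands in the same bucket on every visit. This is an illustration under assumptions, not how any particular platform does it; `assign_variant` and its parameters are hypothetical names.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant for a given experiment.

    Hashing experiment + user_id keeps a user's assignment stable across
    visits while spreading users roughly evenly across the variants.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer, then pick a bucket.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# The same user always sees the same version of the same test.
print(assign_variant("user-123", "homepage-headline"))
print(assign_variant("user-123", "homepage-headline"))
```

Because both versions are served over the same period, weekday swings and other outside conditions affect them equally.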

Run tests long enough to reach statistical significance

Avoid impatience. Checking results after 50 visitors invites random noise. Sample size depends on your baseline conversion rate and the lift you aim to detect, but plan for hundreds or thousands of users per variation. As a rule of thumb, run tests for at least one full business cycle (7–14 days) to account for day-of-week effects.
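To see how baseline rate and target lift drive sample size, here is a rough sketch using the standard normal-approximation formula for comparing two proportions. It assumes a two-sided test at 95% confidence and 80% power, which are common defaults rather than anything this article prescribes, and the function name is illustrative. Treat it as a sanity check alongside your platform's calculator, not a replacement for it.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation to detect a relative lift.

    baseline:      current conversion rate, e.g. 0.03 for 3%
    relative_lift: smallest lift worth detecting, e.g. 0.10 for +10%
    alpha, power:  assumed defaults (two-sided 5% significance, 80% power)
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be distinct and strictly between 0 and 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Detecting 3% -> 4% takes several thousand visitors per variation;
# detecting a smaller lift on the same baseline takes far more.
print(sample_size_per_variation(0.03, 1 / 3))
print(sample_size_per_variation(0.03, 0.10))
```

The takeaway is the shape of the relationship: halving the lift you want to detect roughly quadruples the traffic you need.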

Avoid external influences

Don't run tests during major promotions, press coverage spikes, or site issues. External events can overwhelm the signal from your copy changes.

Document everything obsessively

Record hypothesis, test setup, timeline, and interpretation. Documentation preserves institutional knowledge and prevents duplicative tests.
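A spreadsheet works fine for this, but if your team prefers structured records, a small data class is one way to keep every test in the same shape. The sketch below is only a suggestion: the class and field names are hypothetical, chosen to mirror the four items above (hypothesis, setup, timeline, interpretation), and the example values are placeholders.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date
from typing import Optional


@dataclass
class ExperimentRecord:
    """One row in a test log. All field names are illustrative."""
    name: str
    hypothesis: str                      # what you expect and why
    element_tested: str                  # setup: the single variable changed
    control_copy: str
    variant_copy: str
    primary_metric: str
    start_date: date                     # timeline
    end_date: Optional[date] = None
    interpretation: str = ""             # filled in once the test ends

    def to_json(self) -> str:
        data = asdict(self)
        # Dates are not JSON-serializable by default, so store ISO strings.
        data["start_date"] = self.start_date.isoformat()
        data["end_date"] = self.end_date.isoformat() if self.end_date else None
        return json.dumps(data, indent=2)


record = ExperimentRecord(
    name="cta-first-person",
    hypothesis="First-person CTA copy increases clicks via stronger ownership",
    element_tested="CTA button copy",
    control_copy="Start Your Free Trial",
    variant_copy="Start My Free Trial",
    primary_metric="CTA click-through rate",
    start_date=date(2024, 1, 8),         # placeholder date
)
print(record.to_json())
```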

Copy Variations That Drive Conversion Optimization

With a framework in place, explore variation strategies that commonly move the needle. Different messaging appeals to different segments—systematic testing reveals which resonates with your users.

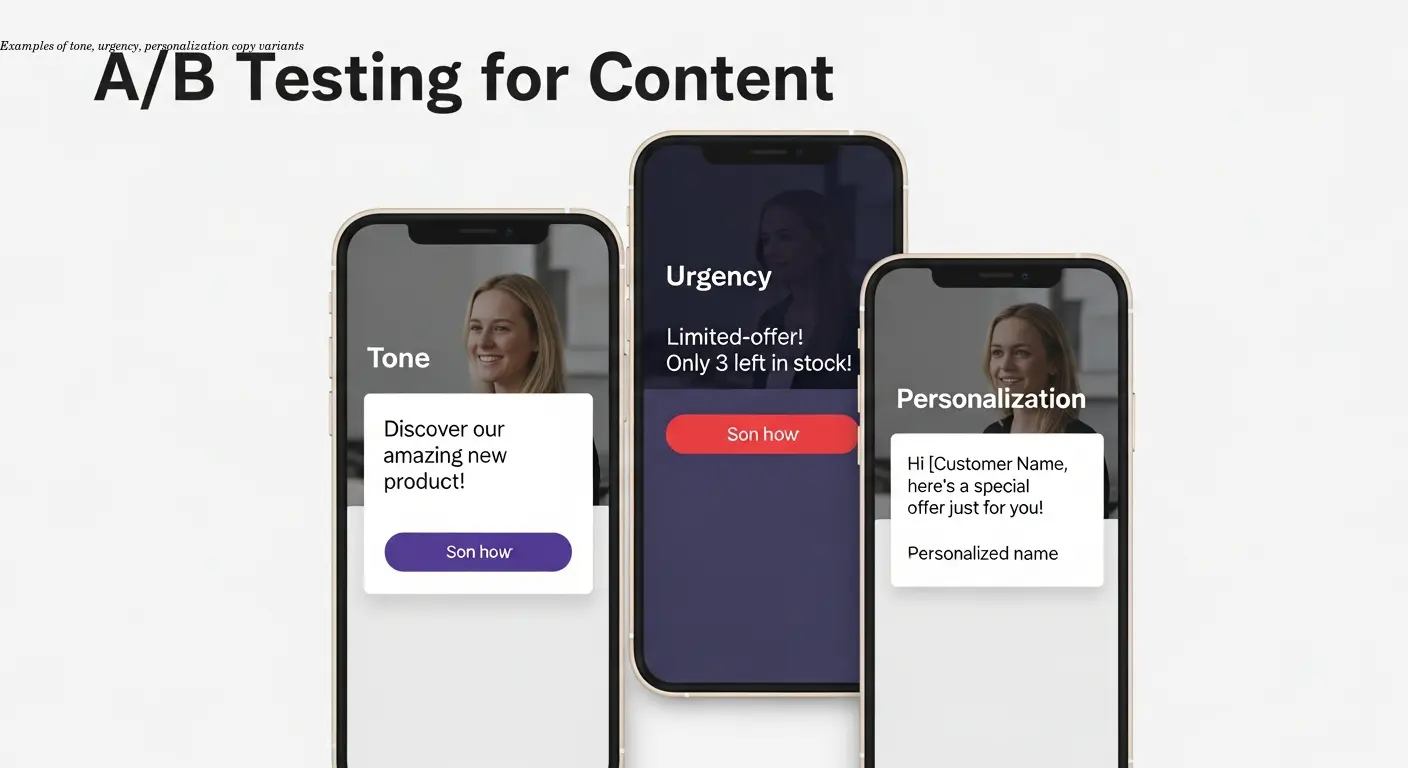

Tone and voice testing

Some audiences respond best to casual, friendly language; others prefer formal, professional copy. For a B2B SaaS client, a "friendly expert" tone outperformed corporate-speak and increased demo requests by 18% among IT decision-makers.

Urgency and scarcity language

Real urgency ("Only 5 spots left") can boost conversions. Avoid manufactured scarcity—users detect manipulation. Genuine scarcity and real deadlines tend to perform better.

Benefit-focused vs. feature-focused

Features tell what the product does; benefits tell users what they gain. Technical audiences may prefer features, while business buyers often respond to benefits. Test to learn which approach suits each persona.

Personalization vs. generic messaging

Personalized subject lines (names, company data) and behavior-driven messages ("You left items in your cart") generally improve engagement. The magnitude varies by channel and audience, so measure the lift for your recipients.

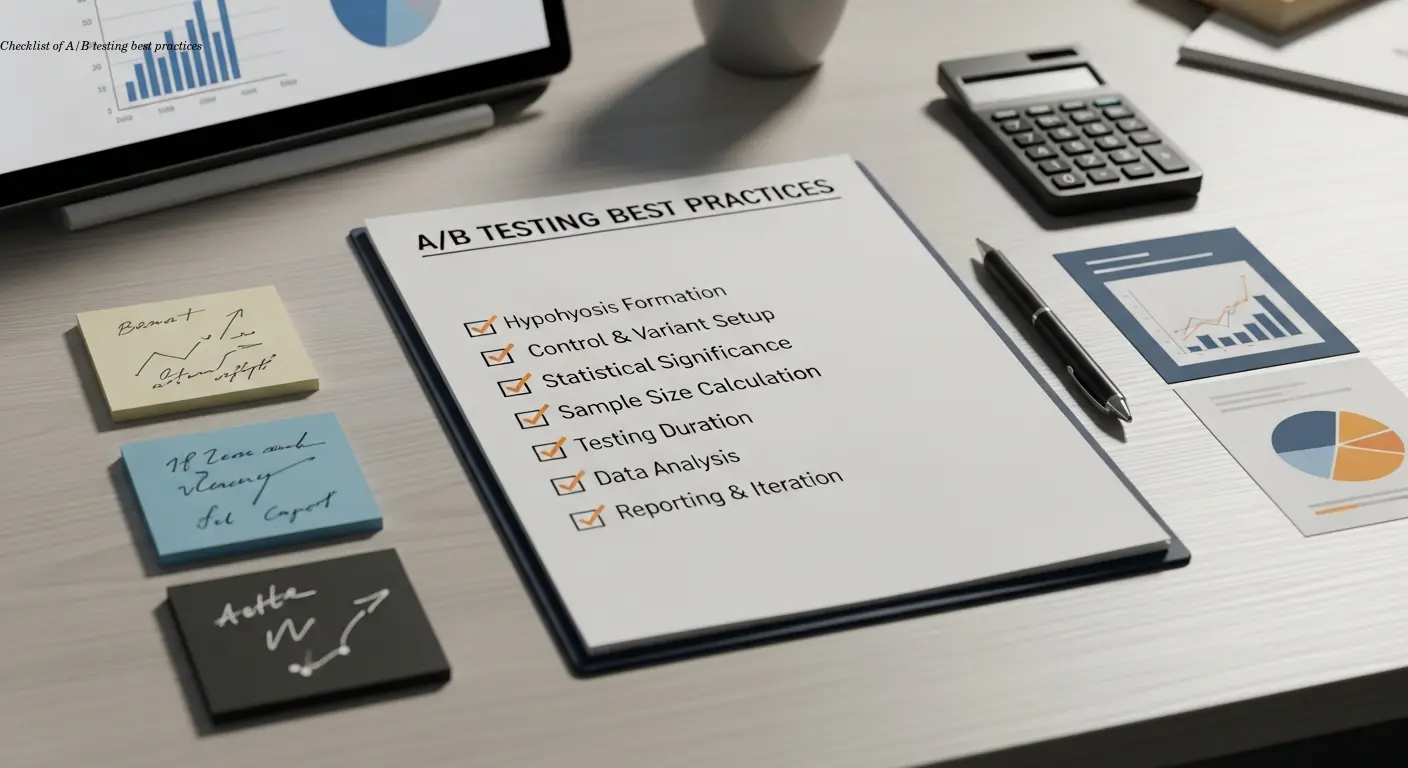

Best Practices for Testing Marketing Copy

Successful testing programs share common habits. Adopt these to maximize your return on testing investment.

- Stay goal-focused. Each test should tie to a business objective—revenue, retention, acquisition cost, or engagement.

- Maintain strict control variables. Keep the control as your baseline and change only the tested element.

- Make testing continuous. Run new experiments as old ones finish to compound learning and adapt to changing preferences.

- Share insights broadly. Publish results in a central repository so headlines that work can inform emails, ads, and social copy.

- Test progressively. Use winners as new controls and build incremental improvements over time.

Common Pitfalls and How to Avoid Them

Even experienced teams make avoidable mistakes. Recognize these pitfalls and design your experiments to prevent them.

Testing too many variables simultaneously

Changing multiple elements at once makes it impossible to know which change caused any improvement. Commit to single-variable tests to produce actionable lessons.

Calling tests too early

A temporary lead after a day is not a victory. Statistical significance exists to protect you from random variation—use proper sample-size calculators and avoid premature decisions.

Ignoring statistical significance

Picking the "winner" without sufficient data is guessing with extra steps. Use platforms that calculate confidence automatically or learn the basics of p-values and confidence intervals.
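For readers who want to see what a platform is computing, here is a minimal sketch of a two-proportion z-test, one standard way to compare two conversion rates. Your tool may use a different method (Bayesian or sequential approaches are also common), so treat this as an illustration; the function name and the example counts are made up.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a, conv_b: conversions in control and variant
    n_a, n_b:       visitors in control and variant
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test when the pooled rate is 0 or 1")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 150 of 5,000 convert on control, 200 of 5,000 on variant.
z, p = two_proportion_z_test(150, 5000, 200, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 95%" if p < 0.05 else "not significant at 95%")
```

Decide the sample size before the test starts and run this check once at the end; re-running it every day and stopping at the first "significant" result inflates false positives.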

Testing during abnormal conditions

Don't run tests during Black Friday, product launches, or technical issues. Abnormal conditions introduce noise that masks real effects.

Failing to consider segment differences

A variation might win overall but fail for specific segments. Always segment results by traffic source, campaign, device, or persona to understand nuanced performance.
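If your platform lets you export raw results, a segment breakdown takes only a few lines. The sketch below assumes each exported row can be reduced to a `(segment, variant, converted)` tuple; that layout, the function name, and the sample rows are all hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(events):
    """Compute conversion rate for every (segment, variant) pair.

    events: iterable of (segment, variant, converted) tuples,
            where converted is True/False.
    """
    totals = defaultdict(lambda: {"visitors": 0, "conversions": 0})
    for segment, variant, converted in events:
        cell = totals[(segment, variant)]
        cell["visitors"] += 1
        cell["conversions"] += int(converted)
    return {
        key: cell["conversions"] / cell["visitors"]
        for key, cell in totals.items()
    }


# Tiny made-up sample: the variant wins on mobile but loses on desktop.
events = [
    ("mobile", "A", False), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", False),
    ("desktop", "A", True), ("desktop", "A", True),
    ("desktop", "B", True), ("desktop", "B", False),
]
for (segment, variant), rate in sorted(conversion_by_segment(events).items()):
    print(f"{segment:8s} {variant}: {rate:.0%}")
```

Keep in mind that each segment holds only a slice of your traffic, so segment-level differences need the same significance check as the overall result before you act on them.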

FAQ

How long should I run an A/B test on my content?

Run each test until it reaches its planned sample size and statistical significance, which typically requires at least 7 to 14 days and hundreds to thousands of visitors per variation. The exact duration depends on your traffic volume and baseline conversion rate. Low-traffic sites need longer test periods than high-traffic sites. Don't stop tests early just because one version is ahead; you need enough data to be confident the difference is real rather than random chance.
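Once you know roughly how many visitors each variation needs, turning that into a duration is simple division. A quick sketch, assuming traffic is split evenly across variations and using a 7-day floor in line with the guidance above (the function and parameter names are illustrative):

```python
import math


def estimated_test_days(required_per_variation, daily_visitors,
                        variations=2, min_days=7):
    """Estimate how many days a test needs to collect its sample.

    required_per_variation: visitors needed in each variation
    daily_visitors:         total daily traffic entering the test
    min_days:               floor so the test spans at least a full week
    """
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = required_per_variation * variations
    days_for_sample = math.ceil(total_needed / daily_visitors)
    return max(days_for_sample, min_days)


print(estimated_test_days(5000, 500))   # 20 days to reach the sample
print(estimated_test_days(1000, 2000))  # 7: sample arrives fast, floor applies
```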

Can I test more than two variations at once?

Yes, you can run A/B/n tests with multiple variations, but start with simple A/B tests. Testing multiple variations simultaneously requires larger sample sizes and longer test durations to reach statistical significance, because traffic is split more ways and each extra comparison adds another chance of a false positive. For most situations, testing one variation against a control is faster and easier to analyze. Once you've built testing experience, you can explore A/B/n tests and, beyond those, multivariate tests that vary several elements in combination.

What's the minimum sample size for reliable A/B testing results?

There's no universal minimum; it depends on your baseline conversion rate and the size of improvement you're trying to detect. As a rough guide, aim for at least 100 to 350 conversions per variation to detect moderate improvements. Use online sample size calculators that account for your specific metrics. For low-conversion events, you might need thousands of visitors in each variation.

Should I test email subject lines or landing page copy first?

Test whichever has higher volume and more direct business impact. Email subject lines are great starting points because they're easy to test, show results quickly, and directly affect open rates. Landing page copy often has more impact on revenue but requires more traffic and longer testing periods. Start with subject lines to build testing skills, then expand to landing pages.

How do I know if my test results are statistically significant?

Most A/B testing platforms calculate statistical significance automatically and show you a confidence level; 95% or higher is the usual threshold. Loosely, this means that if the two versions truly performed the same, a difference as large as the one you're seeing would appear by chance less than 5% of the time. If you're calculating manually, you'll need to understand p-values and confidence intervals, or use online significance calculators designed for conversion testing.

What if both variations perform about the same?

This happens more than you'd think and it's actually valuable information. It tells you that particular copy variation doesn't matter to your audience, so you can focus testing efforts elsewhere. It might also mean your variations weren't different enough to produce measurable effects. Document the null result and move on to testing a different element or creating more distinct variations.

How often should I test my marketing copy?

Continuously, if possible. User preferences change, markets evolve, and competitors shift their messaging. Run tests regularly on your highest-impact copy elements: monthly for email campaigns, quarterly for major landing pages. Build testing into your workflow rather than treating it as a special project. The most successful growth marketers always have at least one test running.

Can A/B testing work for small websites with limited traffic?

Yes, but you'll need patience and strategic focus. Small sites should test high-impact elements (like email subject lines or main CTA copy) where even limited traffic can produce meaningful results over time. Consider testing more dramatic variations that are easier to detect with smaller samples. You might also extend test durations to several weeks or months to accumulate sufficient data.
