A/B testing content that boosts conversions for growth

A/B Testing Content: Compare Different Copy Variations That Actually Convert

Estimated reading time: 12 minutes

- A/B testing content systematically compares copy variations to identify which performs best with real users.

- Test only one variable at a time, and run each test until it reaches a sample size, set in advance, that is large enough for statistical significance.

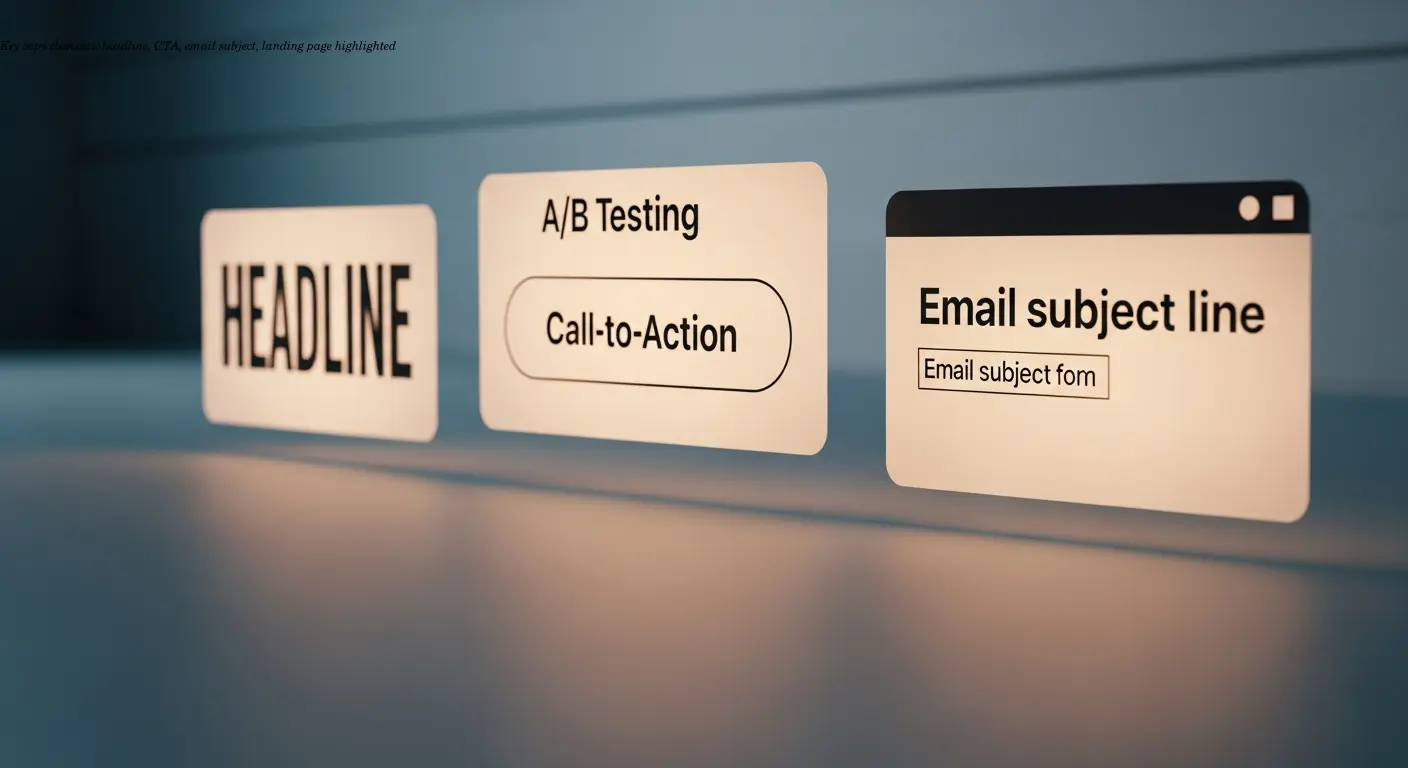

- Prioritize high-impact areas—headlines, CTAs, email subjects, landing page copy—for the biggest conversion lift.

- Document every test, use proper technical SEO safeguards (302s, canonical tags), and keep iterating.

Understanding A/B Testing Content and Why It Matters

A/B testing content is the practice of creating different versions of your marketing copy and measuring which one gets better results from real users. It's a controlled experiment: change one piece of text, such as a headline, a CTA, or an email subject line, and observe how people respond.

Many growth marketers skip testing because they think they "know" their audience. The data often disagrees. For example, one campaign tested a features-focused product description against a benefits-focused version; the benefits copy won by 34%, and that insight reshaped subsequent messaging.

The power of text A/B testing lies in removing guesswork. Instead of relying on opinions or general best practices, you collect performance data from actual users. This method applies across websites, emails, ads, landing pages, and product descriptions.

Small improvements compound. Improve a headline by 15%, a CTA by 10%, and body copy by 8%—those gains multiply across thousands or millions of visitors and form a conversion optimization engine that outperforms competitors who are still guessing.
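
A quick way to check the compounding claim is to multiply the relative lifts instead of adding them. This is a minimal sketch that assumes the three improvements are independent and each one scales the same conversion path, which real tests rarely guarantee exactly.

```python
from math import prod


def compound_lift(lifts: list[float]) -> float:
    """Combine relative lifts (0.15 means +15%) multiplicatively.

    Assumes each lift applies independently to the same conversion path.
    """
    return prod(1 + lift for lift in lifts) - 1


# Headline +15%, CTA +10%, body copy +8% (the figures from the paragraph above)
lifts = [0.15, 0.10, 0.08]
print(f"Combined lift: {compound_lift(lifts):.1%}")  # Combined lift: 36.6%
print(f"Simple sum:    {sum(lifts):.1%}")
```

Under those assumptions the combined lift (about 37%) beats the simple sum (33%), which is what "compounding" means here.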

The Core Elements You Should Be Testing

Start with the elements that impact attention and action most directly. Below are the common places to begin:

Headlines and Subheadings

Headlines are usually the first place to start—if the headline doesn't capture attention in a few seconds, nothing else matters. Test length, emotional tone, and value propositions. Your headline is the gatekeeper; experiment with different hooks and benefit statements.

CTA Buttons

CTA text deserves more attention than many marketers give it. Swapping "Buy Now" for "Get Started" might seem minor, but it can significantly change click-through rates. Start with text, then iterate on color, size, and placement. Compare urgency-driven copy with benefit-driven copy to see what motivates your audience.

Email Subject Lines

Subject lines are among the most-tested elements because open rates depend on them. Test personalization, length, questions vs. statements, and emojis vs. plain text. Segment your audience, since what works for one group may fail for another.

Landing Pages and Product Descriptions

Landing pages and product descriptions also require systematic testing: headline, subheadlines, bullets, testimonials, and closing CTAs. Compare technical vs. storytelling approaches, long-form vs. short-form, and different formatting styles.

How to Set Up Effective Copy Variation Tests

Start with a clear hypothesis: don't change words at random. Base variations on user data, customer feedback, or support questions. For example, if customers ask "how long does shipping take," test adding that info prominently.

Split your audience randomly, either evenly (50/50) or in another ratio you choose in advance, and verify that the actual traffic distribution matches it. Run variations simultaneously rather than one after another, because weekday and weekend behavior differ and would skew the comparison.
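
One common way to split traffic randomly yet consistently is to hash a stable visitor identifier, so the same person always sees the same variant. The sketch below assumes you have such an ID (the `user-…` IDs and the `homepage-headline` experiment name are hypothetical) and pairs the assignment with a sample ratio mismatch (SRM) check, a chi-square test that flags when the observed split drifts from the planned one.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str, split_a: float = 0.5) -> str:
    """Deterministically bucket a user into 'A' or 'B'.

    Hashing experiment + user ID keeps assignments stable across visits
    and independent between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # map the first 32 bits to [0, 1]
    return "A" if bucket < split_a else "B"


def srm_p_value(count_a: int, count_b: int, split_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for the observed split."""
    total = count_a + count_b
    expected_a = total * split_a
    expected_b = total * (1 - split_a)
    chi2 = (count_a - expected_a) ** 2 / expected_a + (count_b - expected_b) ** 2 / expected_b
    # For 1 degree of freedom, P(chi-square > x) equals erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Simulated traffic from 10,000 hypothetical visitors
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-headline")] += 1
print(counts, "SRM p-value:", round(srm_p_value(counts["A"], counts["B"]), 3))
```

A very small SRM p-value (a strict cutoff such as 0.001 is a common choice) usually signals a problem with assignment or tracking rather than a real effect, so investigate before trusting the test's results.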

Define success metrics before launching. For email tests, track open and click rates; for landing pages, conversion rate and time on page; for ads, CTR and cost per conversion. Tools like HubSpot, VWO, and Optimizely help track these, but decide on your success metric in advance.

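Statistical significance is non-negotiable, and short tests with small samples produce untrustworthy results. Calculate the required sample size before launching, run the test for at least 30 days and until that sample is reached, and only then check whether the result clears 95% confidence. Stopping the moment a dashboard first shows 95% inflates the false-positive rate, so patience is what keeps false winners out of production.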
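
Most testing tools compute significance for you, but it helps to see what a typical calculation looks like. The sketch below runs a two-sided, two-proportion z-test with Python's standard library; the visitor and conversion counts are made-up illustration values, and your tool may use a different method (for example, a Bayesian model or a sequential test).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate: the best estimate of the shared rate if there is no real difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 20,000 visitors per variant, 2.0% vs 2.4% conversion
z, p = two_proportion_z_test(conv_a=400, n_a=20_000, conv_b=480, n_b=20_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

Run it once, at the sample size you planned. Re-running it every day and stopping at the first p < 0.05 inflates false positives.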

Best Practices for Text A/B Testing Success

Golden rule: test one variable at a time. If you change headline, CTA, and body together, you won't know what caused any lift. Define clear goals—clicks, conversions, engagement, or bounce reduction—so you measure the right metrics.

Document everything: hypothesis, variations, dates, sample sizes, and outcomes. Build a knowledge base so you don't re-test what you already learned and so new team members can get up to speed.
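
A lightweight way to keep that knowledge base consistent is to give every test the same structured record. The sketch below uses a Python dataclass serialized to JSON; the field names, the `CopyTest` class, and the example values are illustrative assumptions rather than a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date
from typing import Optional


@dataclass
class CopyTest:
    """One entry in a hypothetical copy-testing log."""
    name: str
    hypothesis: str
    primary_metric: str          # decided before launch
    variants: dict[str, str]     # variant label -> copy shown
    start: date
    end: Optional[date] = None
    sample_sizes: dict[str, int] = field(default_factory=dict)
    outcome: str = "running"

    def to_json(self) -> str:
        data = asdict(self)
        # Dates are not JSON-serializable by default, so store them as ISO strings.
        data["start"] = self.start.isoformat()
        data["end"] = self.end.isoformat() if self.end else None
        return json.dumps(data, indent=2)


entry = CopyTest(
    name="product-page-shipping-info",
    hypothesis="Showing delivery time near the CTA answers a common support question and lifts checkouts.",
    primary_metric="checkout conversion rate",
    variants={"A": "Buy now", "B": "Buy now (ships in 2 days)"},
    start=date(2024, 3, 1),
)
print(entry.to_json())
```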

Avoid SEO mistakes: use 302 redirects for temporary URL redirects, add rel="canonical" tags to indicate the main page, and never cloak content to search engines. Follow Google's guidance to prevent ranking penalties.
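
For URL-based (split URL) tests, those safeguards look roughly like this in practice. The sketch below is a minimal WSGI app using only Python's standard library: visitors in the variant bucket get a temporary 302 redirect to `/landing-b`, and both pages declare the original URL as canonical. The paths, the `example.com` domain, and the per-request random bucketing are assumptions for illustration; a real setup would assign visitors consistently (for example, by hashing a stable user ID).

```python
import random

ORIGINAL_URL = "https://example.com/landing"  # hypothetical canonical URL

# Hypothetical page bodies for the control and the variant
PAGES = {
    "/landing": "<h1>Create Images with AI</h1>",
    "/landing-b": "<h1>Create Images 5x Faster and Cheaper with AI</h1>",
}


def app(environ, start_response, rng=random.random):
    path = environ.get("PATH_INFO", "/")
    # Same logic for every visitor, crawlers included: no user-agent checks (no cloaking).
    if path == "/landing" and rng() < 0.5:
        # 302 = temporary redirect, signalling that the original URL remains the real page.
        start_response("302 Found", [("Location", "/landing-b")])
        return [b""]
    if path not in PAGES:
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Not found"]
    # Both versions point search engines at the original URL as the canonical page.
    html = (
        f'<html><head><link rel="canonical" href="{ORIGINAL_URL}"></head>'
        f"<body>{PAGES[path]}</body></html>"
    )
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [html.encode("utf-8")]
```

To try it locally, you could pass `app` to `wsgiref.simple_server.make_server`. Once the test ends, remove the redirect and the variant page.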

Common Copy Elements That Drive Conversion Lift

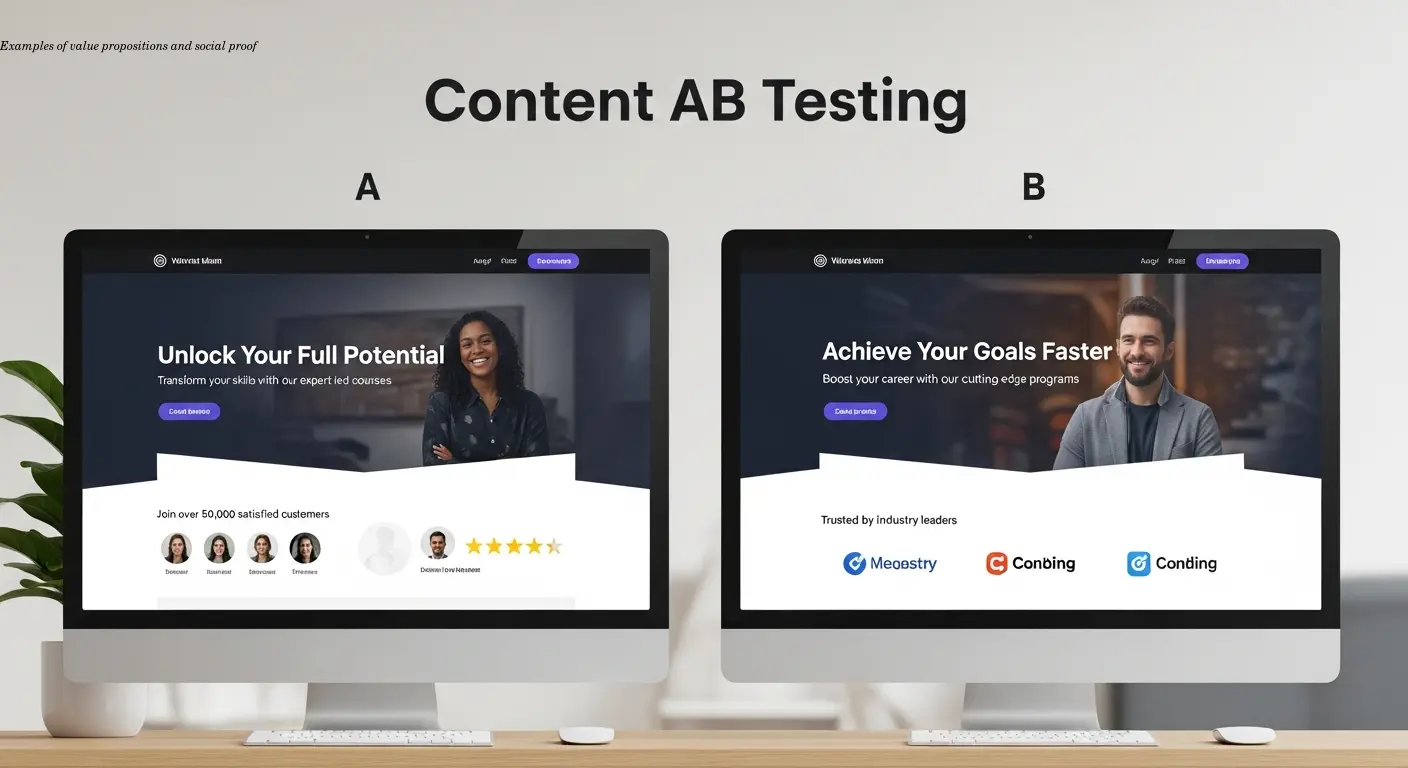

Value propositions that are specific win. Compare "Create Images with AI" against "Create Images 5x Faster and Cheaper with AI"—the second communicates a concrete benefit. Test multiple ways to express your core value.

Social proof (testimonials, user counts, trust badges) performs differently depending on wording and specificity. Try "Join 50,000+ marketers" vs. "Trusted by thousands" and test placement and length.

Urgency and scarcity can boost conversions when authentic: "Limited time offer" vs. "Sale ends Friday" vs. none. Use real urgency—fake timers and false scarcity erode trust.

Question-based headlines ("Want to 10x Your Email Open Rates?") create curiosity, educational headlines ("How to 10x Your Email Open Rates") teach, and direct headlines ("10x Your Email Open Rates") command action. Test systematically to see what your audience prefers.

Tools and Platforms for Running Copy Tests

Google Optimize was long a popular free starting point because it integrated with Google Analytics and offered a visual editor for testing headlines, CTAs, and simple page copy. Google shut it down in September 2023 and now points users toward third-party testing tools that integrate with Google Analytics 4.

HubSpot offers built-in A/B testing for emails and landing pages with automatic significance reporting—convenient if you're on the HubSpot platform (paid features usually required).

Optimizely and VWO serve enterprise needs: complex multi-page tests, personalization, multivariate tests, and advanced targeting. They require more setup but provide greater control.

Email platforms like Klaviyo, Mailchimp, and ActiveCampaign include native A/B testing for subject lines and email bodies, often with an option to automatically send the winning variant to the remainder of the audience.

Analyzing Results and Implementing Winners

Read results holistically. A winning variation on clicks may worsen conversion quality. Check secondary metrics: time on page, bounce rate, and average order value. Sometimes the "loser" brings higher-value customers.
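
To make the "higher-value customers" point concrete, compare revenue per visitor alongside conversion rate. The numbers below are hypothetical, chosen only to show how the ranking can flip between metrics.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    visitors: int
    orders: int
    revenue: float

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.visitors

    @property
    def average_order_value(self) -> float:
        return self.revenue / self.orders

    @property
    def revenue_per_visitor(self) -> float:
        return self.revenue / self.visitors


# Hypothetical results: B converts more often, but A's customers spend more.
results = {
    "A": VariantResult(visitors=10_000, orders=300, revenue=27_000.0),
    "B": VariantResult(visitors=10_000, orders=340, revenue=23_800.0),
}
for name, r in results.items():
    print(
        f"{name}: CR {r.conversion_rate:.1%}, "
        f"AOV ${r.average_order_value:.2f}, RPV ${r.revenue_per_visitor:.2f}"
    )
```

Here B wins on conversion rate while A earns more per visitor. Revenue is noisier than conversion rate, so a revenue difference usually needs a larger sample to be trusted.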

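Understand what statistical significance means: at a 95% confidence level, a difference at least as large as the one observed would show up less than 5% of the time if the variations truly performed the same. It does not mean there is a 95% chance the winner is better. Don't call tests early; checking repeatedly and stopping at the first significant reading inflates the false-positive rate.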
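
The cost of calling tests early can be shown with an A/A simulation, where both variants are identical, so every "significant" result is a false positive. The sketch below compares checking a z-test once at the planned sample size with checking it after every batch of visitors. The experiment count, traffic, batch size, 10% baseline rate, and seed are arbitrary assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a: int, conv_b: int, n: int) -> float:
    """Two-sided two-proportion z-test p-value with n visitors per variant."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 1.0
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate(experiments: int = 300, visitors: int = 2_000, batch: int = 100,
             rate: float = 0.10, seed: int = 7) -> tuple[float, float]:
    """Return (single-look false-positive rate, peeking false-positive rate)."""
    rng = random.Random(seed)
    fixed_hits = peeking_hits = 0
    for _ in range(experiments):
        conv_a = conv_b = 0
        ever_significant = False
        for n in range(1, visitors + 1):
            conv_a += rng.random() < rate  # both arms share the same true rate
            conv_b += rng.random() < rate
            # A "peeker" checks after every batch and would stop at the first p < 0.05.
            if n % batch == 0 and p_value(conv_a, conv_b, n) < 0.05:
                ever_significant = True
        peeking_hits += ever_significant
        fixed_hits += p_value(conv_a, conv_b, visitors) < 0.05
    return fixed_hits / experiments, peeking_hits / experiments


fixed_rate, peeking_rate = simulate()
print(f"False positives, single look: {fixed_rate:.1%}")
print(f"False positives, peeking:     {peeking_rate:.1%}")
```

The single-look rate should stay near the nominal 5%, while the peeking rate comes out noticeably higher because every extra look is another chance for noise to cross the threshold.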

Implement winners systematically across channels—if a headline wins on a landing page, test similar language in ads, emails, and social. Build a style guide from winning tests so teams can apply proven language consistently.

Keep testing even after finding a winner. Preferences change, competitors shift, and audience behavior evolves. Set a cadence to revisit high-traffic pages and campaigns every few months.

Building a Sustainable Testing Program

Prioritize high-traffic, high-impact pages and campaigns. A 10% lift on a page with 100,000 visitors is worth more than a 50% lift on a page with 500 visitors. Estimate potential value before committing resources.
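
The comparison is simple arithmetic once you assume a baseline conversion rate. This sketch assumes both pages convert at 2% (a hypothetical figure) and estimates the extra conversions each lift would add.

```python
def incremental_conversions(visitors: int, baseline_rate: float, relative_lift: float) -> float:
    """Extra conversions a winning variant would add over the same traffic."""
    return visitors * baseline_rate * relative_lift


# Hypothetical: both pages convert at 2% before the test.
big_page = incremental_conversions(visitors=100_000, baseline_rate=0.02, relative_lift=0.10)
small_page = incremental_conversions(visitors=500, baseline_rate=0.02, relative_lift=0.50)
print(f"10% lift on 100,000 visitors: {big_page:.0f} extra conversions")
print(f"50% lift on 500 visitors:     {small_page:.0f} extra conversions")
```

If those 500 visitors are split evenly, each variant sees only about five conversions at a 2% baseline, far too few to reach significance in the first place.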

Maintain a testing calendar balancing quick wins (headline swaps) with longer experiments (full landing page overhauls). Running 2–3 tests simultaneously across channels typically works well for most teams.

Create a hypothesis library where anyone can submit ideas based on customer conversations, surveys, and analytics. Involve support and sales teams—they hear objections and conversion drivers every day.

Share results broadly to build buy-in. When copy teams see measurable lifts and executives see ROI improvements, resources flow to optimization and a data-driven culture takes root.

FAQ

How long should I run an A/B test for content?

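Decide the required sample size before launching, then run the test for at least 30 days and until that sample is reached before judging it at 95% statistical significance. Don't stop early just because a dashboard briefly shows 95%. Low-traffic pages may need 60–90 days to collect sufficient data.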

Can I test multiple copy variations at once?

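Yes, but it takes much more traffic. Testing several versions of the same element is usually called A/B/n testing; testing combinations of changes to several elements at once is multivariate testing. Both split your traffic into more groups, so each takes longer to reach significance. As a rough guide, with 100,000+ monthly visitors you can test 3–4 variations; for most sites, stick to a simple A/B test with two versions.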
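
Adding versions raises the bar in two ways: each version gets a smaller share of traffic, and comparing several challengers against the control raises the chance that one "wins" by luck. The sketch below applies a Bonferroni correction (one conservative option among several) and uses the 100,000 monthly visitors from the answer above as an example; `per_variant_plan` is a hypothetical helper.

```python
def per_variant_plan(monthly_visitors: int, num_versions: int,
                     alpha: float = 0.05) -> tuple[int, float]:
    """Visitors per version and Bonferroni-adjusted alpha for an A/B/n test.

    num_versions includes the control; each challenger is compared with the control.
    """
    comparisons = num_versions - 1
    return monthly_visitors // num_versions, alpha / comparisons


for versions in (2, 3, 4):
    per_version, adjusted_alpha = per_variant_plan(100_000, versions)
    print(f"{versions} versions: {per_version:,} visitors each, "
          f"alpha {adjusted_alpha:.4f} per comparison")
```

A smaller alpha per comparison means each comparison also needs more visitors to reach significance, on top of the thinner traffic per version.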

What's a good conversion rate improvement to expect from copy testing?

Typical improvements range from 5–30% for winning variations, though some tests show no significant difference. Focus on cumulative impact across multiple tests rather than huge wins every time.

Should I test copy on mobile and desktop separately?

Absolutely. Behavior differs by device—test separately or segment results by device type to understand what works for each audience.
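
Segmenting results comes down to grouping visits by device before computing conversion rates. The sketch below uses a handful of made-up visit records; in practice you would export equivalent rows from your analytics or testing tool.

```python
from collections import defaultdict

# Hypothetical visit log: (device, variant, converted)
visits = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", False), ("mobile", "B", False),
    ("desktop", "A", False), ("desktop", "A", False),
    ("desktop", "B", True), ("desktop", "B", True),
]


def conversion_by_segment(rows):
    """Return {(device, variant): conversion rate}."""
    totals = defaultdict(lambda: [0, 0])  # [conversions, visits]
    for device, variant, converted in rows:
        totals[(device, variant)][0] += converted
        totals[(device, variant)][1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


for (device, variant), rate in sorted(conversion_by_segment(visits).items()):
    print(f"{device:<8} {variant}: {rate:.0%}")
```

Each segment holds only a fraction of the traffic, so a segment-level difference needs its own significance check before you act on it.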

How do I know which copy element to test first?

Start with high-impact elements: headlines, CTAs, and email subject lines. Use analytics to find high-traffic pages with low conversion rates—those are prime candidates.

Can A/B testing hurt my SEO rankings?

Not if you follow best practices: use 302 redirects for temporary tests, add rel="canonical" tags, and never cloak content. Properly implemented tests should not harm rankings.

What sample size do I need for reliable results?

It depends on your baseline conversion rate and the improvement you want to detect. Many calculators recommend 350–400 conversions per variation; at a 2% conversion rate that can mean ~20,000 visitors per variation.
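
Rather than relying on a rule of thumb, you can estimate the required sample with the standard normal-approximation formula for comparing two proportions. The sketch below assumes a two-sided test at 95% confidence and 80% power (common defaults, not requirements) and a goal of detecting a 20% relative lift on a 2% baseline.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant to detect the lift (two-sided z-test).

    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


n = sample_size_per_variant(baseline=0.02, relative_lift=0.20)
print(f"Visitors per variant: {n:,}")
```

Under these assumptions the result is a little over 21,000 visitors per variant, close to the ~20,000 figure above. Halving the lift you want to detect roughly quadruples the required sample.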

Should I test everything or just major changes?

Prioritize changes with meaningful potential impact. Tiny tweaks like "the" vs. "a" are rarely worth testing unless you have massive traffic. Focus on impact and traffic to maximize learning efficiency.
