Understanding Large Concept Models and Their Impact

Understanding Large Concept Models (LCMs) and How They Differ from LLMs

Estimated reading time: 12 minutes

Key Takeaways

- Large Concept Models (LCMs) work on concepts or sentences, unlike Large Language Models (LLMs) that handle individual tokens or words.

- LCMs offer better reasoning, coherence, and language-independence but are still in early research stages.

- LLMs have proven success and token-level precision but struggle with hallucinations and long-context coherence.

- The practical impact of LCMs is promising for future AI, especially in areas requiring deep understanding, like AI customer support.

- Future AI likely involves hybrids that combine strengths of both approaches.

Table of Contents

- 1. What Is a Large Concept Model (LCM)?

- 2. How LCMs Work: The Core Architecture

- 3. LCM vs LLM: The Key Differences

- 4. Strengths of Large Concept Models

- 5. Weaknesses of Large Concept Models

- 6. Strengths of Traditional Large Language Models

- 7. Weaknesses of Traditional LLMs

- 8. Future Outlook: Where Are LCMs and LLMs Headed?

- FAQ

1. What Is a Large Concept Model (LCM)?

Large Concept Models, or LCMs, represent a fresh way for AI to process information. Instead of reading text piece by piece, as words or tokens, LCMs zoom out and treat whole sentences as single meaningful units, or "concepts". They work with bigger chunks of ideas, grasping the gist rather than every tiny detail - source: debabratapruseth.com.

The process starts by splitting the input into sentences. Each sentence is then converted into an embedding with SONAR, an encoder that maps sentences into a vector space where their meanings can be compared and manipulated. The LCM then predicts the next concept, not the next word, which is a big shift from traditional language models. Finally, the predicted concept embeddings are decoded back into language or, in principle, other formats such as images or audio - source: infoq.com.
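The four steps above can be sketched in plain Python. This is a toy under loud assumptions: `toy_encode` is a hash-based stand-in for a real sentence encoder such as SONAR and carries no meaning, `predict_next_concept` just averages the context instead of running a trained model, and `toy_decode` picks the nearest known sentence rather than generating text. All four function names are made up for illustration.

```python
import hashlib
import re


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def toy_encode(sentence, dim=8):
    """Stand-in for a real sentence encoder such as SONAR.

    Hashes the sentence into a fixed-size vector, so it carries no real meaning.
    """
    digest = hashlib.sha256(sentence.encode("utf-8")).digest()
    return [byte / 255.0 for byte in digest[:dim]]


def predict_next_concept(concepts):
    """Placeholder for the trained model: average the context embeddings."""
    dim = len(concepts[0])
    return [sum(c[i] for c in concepts) / len(concepts) for i in range(dim)]


def toy_decode(vector, candidates):
    """Stand-in decoder: return the candidate whose toy embedding is nearest."""
    def squared_distance(sentence):
        encoded = toy_encode(sentence, len(vector))
        return sum((a - b) ** 2 for a, b in zip(encoded, vector))
    return min(candidates, key=squared_distance)


text = "The router is offline. I restarted it twice. Nothing changed."
sentences = split_sentences(text)               # 1. split into sentences
concepts = [toy_encode(s) for s in sentences]   # 2. one embedding per sentence
next_concept = predict_next_concept(concepts)   # 3. predict the next concept
reply = toy_decode(next_concept, sentences)     # 4. map the vector back to text
print(len(sentences), "sentences ->", len(concepts), "concept vectors")
print("decoded:", reply)
```

The point is the shape of the loop: the model only ever sees one vector per sentence, and words reappear only at the decoding step.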

I tested an early LCM demo recently and was impressed by how well it kept the bigger picture compared with the chatbots I usually use. It's early days, true, but it felt like the AI was thinking in ideas, not just word chains.

Even though the research is fresh (LCMs appeared in late 2024), the approach hints at possible changes in how AI understands and generates language and beyond.

2. How LCMs Work: The Core Architecture

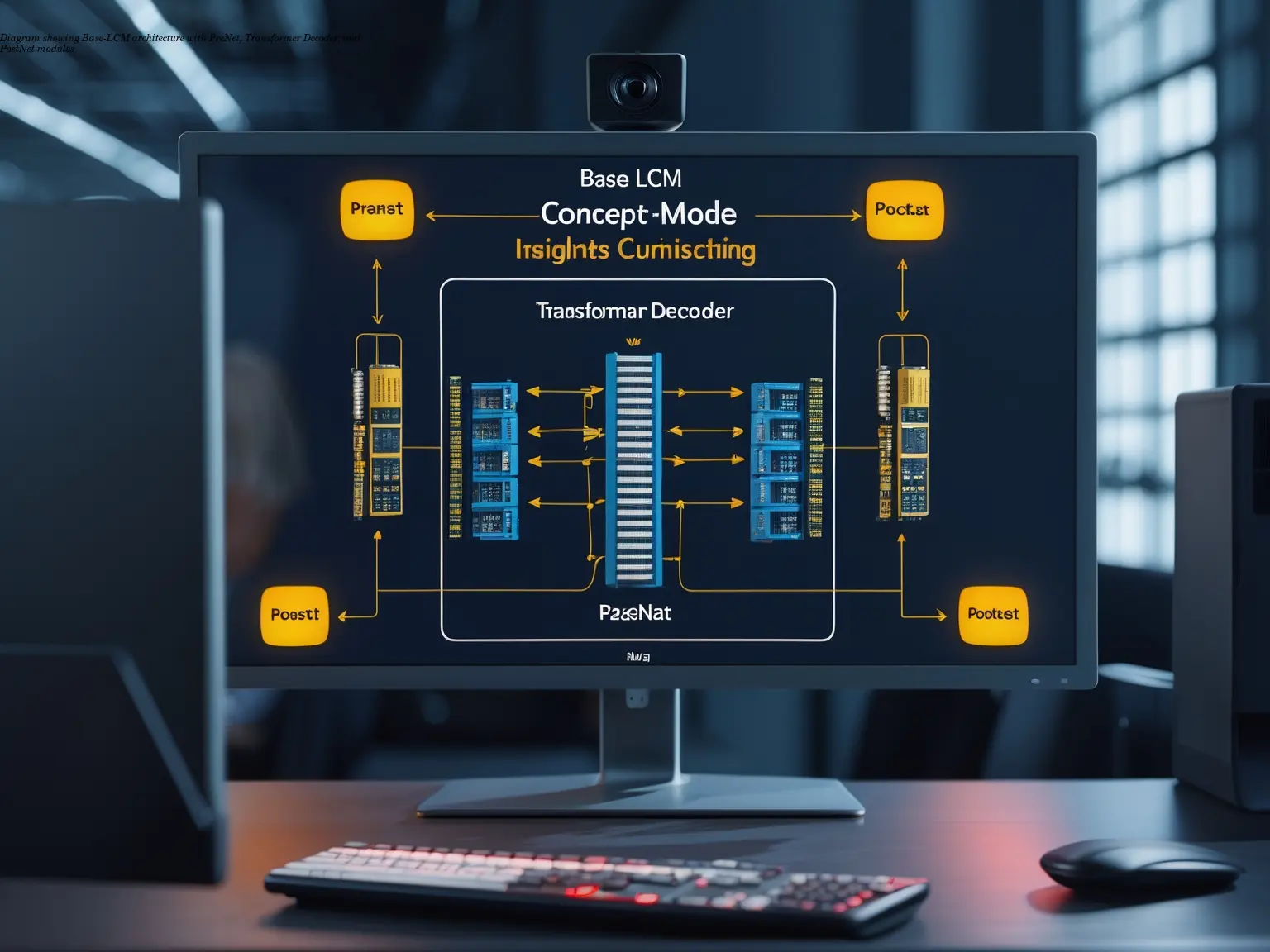

A few components make this approach possible. The baseline design, known as Base-LCM, has three key modules:

- PreNet: It tweaks the concept embeddings to prepare them for the next step.

- Transformer Decoder: This looks at relations and dependencies between concepts to generate the next idea.

- PostNet: It takes output from the transformer and maps it back to the concept space.

This whole pipeline differs from LLMs that predict the next word based on previous words. Here, LCMs predict the next concept based on prior concepts, so the model thinks in a more structured way - source: infoq.com.
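Here is a minimal sketch of how the three modules could fit together. It is not the actual Base-LCM implementation: the class name `ToyBaseLCM`, the dimensions, the random untrained weights, the choice of a plain linear projection for PreNet and PostNet, and the single causal attention step standing in for a full Transformer decoder are all assumptions made for illustration.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Random weights; a real model would learn these during training."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def causal_attention(hidden):
    """Each position attends only to itself and to earlier positions."""
    mixed = []
    for t, query in enumerate(hidden):
        scale = math.sqrt(len(query))
        scores = [sum(q * k for q, k in zip(query, hidden[j])) / scale
                  for j in range(t + 1)]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(score - peak) for score in scores]
        total = sum(weights)
        mixed.append([sum(w / total * hidden[j][i] for j, w in enumerate(weights))
                      for i in range(len(query))])
    return mixed


class ToyBaseLCM:
    def __init__(self, concept_dim=4, hidden_dim=6, seed=0):
        rng = random.Random(seed)
        self.prenet = make_matrix(hidden_dim, concept_dim, rng)   # concept -> hidden
        self.postnet = make_matrix(concept_dim, hidden_dim, rng)  # hidden -> concept

    def predict_next(self, concepts):
        hidden = [matvec(self.prenet, c) for c in concepts]  # PreNet
        mixed = causal_attention(hidden)                      # decoder stand-in
        return matvec(self.postnet, mixed[-1])                # PostNet, last position


model = ToyBaseLCM()
context = [[0.1, 0.2, 0.3, 0.4], [0.4, 0.3, 0.2, 0.1]]  # two prior concepts
next_concept = model.predict_next(context)
print(len(next_concept))  # same size as the input concept vectors
```

The output has the same dimensionality as the input concepts, which is what allows a sentence decoder to turn the prediction back into text.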

LCMs can also use diffusion-based designs inspired by image generation techniques, which refine a concept over several steps rather than producing it in one pass. This variant is more experimental but shows how versatile the concept-level approach is.
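The idea of starting from noise and refining step by step can be shown with a toy loop. This is only loosely in the spirit of diffusion sampling and is not how any published LCM is implemented: `denoise_step` is a stub that guesses the clean concept as the mean of the context (a trained network would make that prediction), and the linear blending schedule and all names are assumptions.

```python
import random


def denoise_step(noisy, context, noise_level):
    """Stub denoiser: guess the clean concept as the mean of the context.

    A trained model would predict this from `noisy`, `context` and
    `noise_level`; the stub ignores the first and last of these.
    """
    dim = len(noisy)
    return [sum(vec[i] for vec in context) / len(context) for i in range(dim)]


def sample_next_concept(context, steps=10, seed=0):
    """Start from random noise and blend toward the denoiser's guess."""
    rng = random.Random(seed)
    dim = len(context[0])
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # pure noise
    for step in range(steps, 0, -1):
        clean_guess = denoise_step(x, context, step / steps)
        keep = (step - 1) / steps  # share of the current sample that is kept
        x = [keep * xi + (1 - keep) * gi for xi, gi in zip(x, clean_guess)]
    return x


context = [[1.0, 0.0], [0.0, 1.0]]
print(sample_next_concept(context))
```

On the last step `keep` is zero, so the sample lands exactly on the denoiser's final guess. With a learned denoiser the intermediate steps are where the gradual refinement would happen.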

One thing people often overlook: this architecture lets LCMs be language-agnostic, because the model deals with meaning instead of specific words or grammar rules. This means it could handle French, Chinese, or even non-text data with less tweaking.

A practical story from AI customer support: a colleague shared how LLM-based chatbots sometimes missed customer intent because a few words changed the meaning, whereas concept-focused processing could reduce those errors. LCMs might tackle this issue nicely down the road.

3. LCM vs LLM: The Key Differences

You probably know Large Language Models (like GPT, BERT) pretty well. They analyze text word by word, or token by token, to generate responses. LCMs, on the other hand, look at chunks of meaning: entire sentences or bigger concepts. Here’s a simple comparison:

| Aspect | Large Language Models (LLMs) | Large Concept Models (LCMs) |
|---|---|---|
| Processing Unit | Words or tokens | Whole sentences (concepts) |
| Focus | Predict next word/token | Predict next concept |
| Reasoning | Based on word associations | Concept-level reasoning |
| Abstraction | Low (word-level) | High (concept-level) |
| Language Dependency | Usually language-dependent | Mostly language-agnostic |

This means LLMs need lots of data for each language and sometimes struggle when the context gets long. LCMs hold more info in fewer pieces, which might let them keep track better over time or even cross languages more easily.
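To put rough numbers on "more info in fewer pieces", the sketch below counts whitespace-separated words and sentences in a short support message. Words are only a crude proxy for tokens (real tokenizers usually produce more pieces), and the function name `count_units` and the sample text are made up for illustration. Since standard self-attention compares every position with every other, the number of pairwise comparisons grows with the square of the sequence length.

```python
import re


def count_units(text):
    """Return (word count, sentence count) for a piece of text."""
    words = text.split()  # rough proxy for tokens
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return len(words), len(sentences)


text = ("My order arrived late. The box was damaged. "
        "I would like a refund. Please confirm by email.")
n_tokens, n_concepts = count_units(text)

print(n_tokens, n_concepts)            # 17 4
print(n_tokens ** 2, n_concepts ** 2)  # 289 16 pairwise comparisons
```

This ignores the cost of encoding and decoding each sentence, so it is an illustration of sequence length only, not a performance claim.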

But on the flip side, LLMs work with precise word tweaks, useful in customer support when a single word changes meaning dramatically. So, they're not out of competition just yet.

4. Strengths of Large Concept Models

Working with bigger concepts brings some useful advantages:

- Better Reasoning: LCMs understand ideas and context more deeply rather than just predicting probable next words - source: staituned.com. This helps in complex conversations like tech support or troubleshooting AI, where understanding intent matters.

- Language and Modality Flexibility: Because LCMs encode sentence meaning rather than surface language, they could work across languages and even other data types like images or audio. This opens new doors for multilingual or multi-modal customer support systems.

- Coherence Over Long Texts: By working with concepts, LCMs are less likely to wander off-topic in longer conversations, keeping chatbots on point.

- Reducing Hallucinations: Current LLMs sometimes make stuff up, called hallucinations, especially on vague prompts. LCMs may help by focusing on consistent concepts, which could stabilize output.

- Toward AGI: Some experts think LCMs can take us closer to human-like AI understanding, a big deal for future customer support automation where simple yes/no or short replies won’t cut it - source: debabratapruseth.com.

Though exciting, it’s worth saying the tech still needs more testing and real-world runs before becoming mainstream.

5. Weaknesses of Large Concept Models

Despite these perks, LCMs aren’t without their current downsides:

- In Early Development: LCMs are research-level tech that only appeared in late 2024, not fully baked products. That means commercial usage is limited and experimental.

- Computational Load: Handling whole concepts with complex encodings can demand more computing power compared to token-based LLMs.

- Training Difficulties: Teaching a model to understand and predict concepts accurately is a tricky task. Unlike tokens, concepts can be fuzzy or context-dependent, making training datasets and methods harder to perfect.

- Limited Tooling and Ecosystem: Unlike LLMs backed by years of community and software tools, LCMs lack mature infrastructure.

For example, in AI customer support setups I've seen, deploying bleeding-edge models like LCMs can slow things down and increase costs, which is tough to justify when existing LLMs handle many common cases pretty well.

6. Strengths of Traditional Large Language Models

LLMs have dominated AI text generation for good reasons:

- Proven Effectiveness: They've powered huge advances in translation, summarization, customer chats, and creative writing.

- Rich Ecosystem: A vast range of pre-trained models, APIs, and tools exist, allowing rapid integration into apps.

- Token-Level Precision: This helps with language quirks, wordplay, and exact phrasing, necessary for precise customer support replies or legal contexts.

- Fast Inference: With many optimization tricks, LLMs deliver results swiftly, a must-have in real-time customer interactions.

An AI support expert once told me LLMs still provide the best off-the-shelf solutions for chatbot building today, especially when paired with human-in-the-loop designs.

7. Weaknesses of Traditional LLMs

But LLMs aren't perfect:

- Hallucinations: They can confidently produce false or misleading info, a big risk in customer support where trust matters - source: infoq.com.

- Shallow Reasoning: Because LLMs predict word sequences statistically, they sometimes miss deeper intent or cause confusion on complex queries.

- Short Context Memory: They struggle to keep track of very long chats or documents, so conversations may lose the thread or contradict earlier statements.

- Language Dependence: Many LLMs perform best in English, requiring additional tuning for other languages.

You’ll often see this in AI helpdesks where bots misunderstand multi-turn conversations or provide inconsistent advice.

8. Future Outlook: Where Are LCMs and LLMs Headed?

LCMs hint at a shift from treating language as sequences of words toward thinking in ideas and concepts. Though it's early, this could transform AI customer support fundamentally. Imagine bots that truly "get" what you mean even with slang or ambiguous requests, across multiple languages, all while staying consistent.

In the near term, I expect hybrid models combining the precision and tooling of LLMs with the reasoning strengths of LCMs. This mix could power advanced AI helpdesks that handle simple questions quickly but escalate tougher problems to concept-aware systems.
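One way such a helpdesk could be wired up is a simple router in front of two back ends. Everything here is hypothetical: the `looks_simple` heuristic (one short sentence counts as simple), its thresholds, and the two stub handlers standing in for an LLM service and a concept-aware service. A real system would more likely use a trained classifier or confidence scores.

```python
import re


def looks_simple(message, max_sentences=1, max_words=12):
    """Hypothetical heuristic: short, single-sentence messages count as simple."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", message.strip()) if s]
    return len(sentences) <= max_sentences and len(message.split()) <= max_words


def route(message, fast_handler, concept_handler):
    """Send simple messages to the fast path and escalate the rest."""
    handler = fast_handler if looks_simple(message) else concept_handler
    return handler(message)


def llm_stub(message):
    """Stand-in for a call to an existing LLM-based bot."""
    return "llm: " + message


def lcm_stub(message):
    """Stand-in for a call to a concept-aware system."""
    return "lcm: " + message


print(route("Where is my order?", llm_stub, lcm_stub))
print(route("My order arrived late. The box was damaged. I would like a refund.",
            llm_stub, lcm_stub))
```

The first message stays on the fast path, while the multi-sentence complaint is escalated.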

AI developers and businesses looking to future-proof their customer support should watch LCM research closely, but keep relying on established LLM tech for now.

As an AI enthusiast who’s worked both with bots and human teams, I’m hopeful LCMs will bring closer collaboration between humans and machines, making support faster and less frustrating.

FAQ

Q: What exactly is a large concept model?

A: It’s an AI model that processes whole sentences or ideas (concepts) instead of focusing on individual words, allowing deeper understanding.

Q: How do LCMs differ from LLMs fundamentally?

A: LCMs predict the next "concept" or sentence, while LLMs predict the next word or token, leading to differences in reasoning and context handling.

Q: Are LCMs better than LLMs?

A: Not necessarily better yet. LCMs show promise in reasoning and coherence but are early-stage. LLMs are mature and reliable right now.

Q: Can LCMs be used for any language?

A: They are designed to be language-agnostic by focusing on concepts, which potentially allows better multilingual capabilities.

Q: Will LCMs replace LLMs?

A: More likely, they will complement each other, or hybrid models will emerge leveraging the benefits of both.

Q: How does this affect AI customer support?

A: LCMs could improve chatbots' understanding of customer intent and reduce wrong responses, resulting in smoother experiences.
